Github Link:

Task 1 and Evaluation Policy

Task 1 includes two subcompetitions: CIFAR-10N and CIFAR-100N. Each team can choose to participate in either one or both.

Task 1: Learning with Noisy Labels

1. Background

Image classification in deep learning requires assigning a label to each image. Annotating labels for training is expensive because it usually means paying human annotators, and the resulting labels are often noisy. These pervasive noisy labels make it significantly harder to train a high-quality machine learning model.

2. Goal

The goal of this task is to explore the potential of AI approaches when we only have access to human-annotated noisy labels (CIFAR-N). Specifically, for each label-noise setting, the proposed method must train only on the noisy labels and the corresponding training images. The evaluation is based on the accuracy of the trained models on the CIFAR test datasets.

This task places no specific requirements on the experiment settings, e.g., the model architecture or data augmentation strategies. However, the use of clean labels, or of models pre-trained on CIFAR or any other dataset, is not allowed.

3. Evaluation Metric

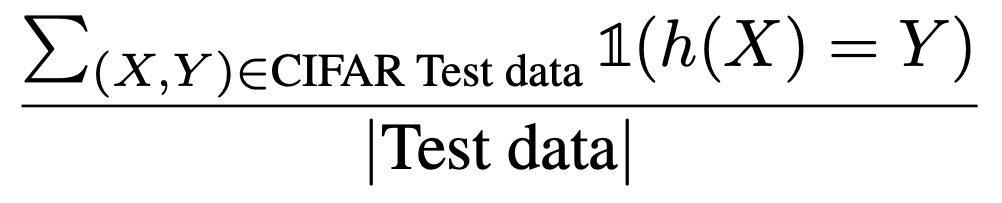

Each submission will be evaluated according to the model's achieved accuracy on the corresponding CIFAR-10/100 test data: denote by h the final model from the submission. We will use the following metric to make the final evaluation:
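As a rough illustration of accuracy-based evaluation, the sketch below computes plain top-1 accuracy of a model h on a labeled test set. It assumes the metric is ordinary classification accuracy, as the text describes; the function name `accuracy` and the callable interface for `h` are hypothetical and are not taken from the organizers' code.

```python
from typing import Callable, Sequence, TypeVar

X = TypeVar("X")


def accuracy(h: Callable[[X], int], inputs: Sequence[X], labels: Sequence[int]) -> float:
    """Fraction of test examples for which h predicts the given label.

    Assumption: `h` maps one input to one predicted class index.
    """
    if len(inputs) != len(labels):
        raise ValueError("inputs and labels must have the same length")
    if len(labels) == 0:
        raise ValueError("test set is empty")
    correct = sum(1 for x, y in zip(inputs, labels) if h(x) == y)
    return correct / len(labels)


# Toy usage: a "model" that predicts the parity of an integer input.
toy_model = lambda x: x % 2
toy_accuracy = accuracy(toy_model, [0, 1, 2, 3], [0, 1, 1, 1])
```

In the toy call, three of the four predictions match their labels.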

Note

The hyperparameter settings should be consistent across the noise regimes of the same dataset, i.e., there will be at most two sets of hyperparameters: one for CIFAR-10N (aggre, rand1, worst) and one for CIFAR-100N.
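One way to keep this constraint visible in code is to key hyperparameters by dataset only, never by noise type. The sketch below is illustrative: the dictionary names, the helper `get_hparams`, the CIFAR-100N noise-type name `"noisy"`, and every numeric value are placeholders chosen for the example, not settings from the competition.

```python
from typing import Dict, Tuple

# Placeholder values; only the structure (one entry per dataset) matters.
HPARAMS: Dict[str, Dict[str, float]] = {
    "cifar10n": {"lr": 0.1, "batch_size": 128, "epochs": 100},
    "cifar100n": {"lr": 0.1, "batch_size": 128, "epochs": 100},
}

# Noise regimes per dataset. "noisy" is a hypothetical name for the single
# CIFAR-100N setting.
NOISE_TYPES: Dict[str, Tuple[str, ...]] = {
    "cifar10n": ("aggre", "rand1", "worst"),
    "cifar100n": ("noisy",),
}


def get_hparams(dataset: str, noise_type: str) -> Dict[str, float]:
    """Return the dataset-level hyperparameters for a given noise regime.

    The noise type is validated but deliberately not used for the lookup,
    so every regime of a dataset shares the same settings.
    """
    if dataset not in HPARAMS:
        raise KeyError(f"unknown dataset: {dataset}")
    if noise_type not in NOISE_TYPES[dataset]:
        raise KeyError(f"unknown noise type for {dataset}: {noise_type}")
    return dict(HPARAMS[dataset])
```

Because the lookup ignores the noise type, it is impossible to tune aggre, rand1, and worst separately by accident.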

4. Requirements

- Participants can only use the standard CIFAR training images and the CIFAR-N noisy training labels;

- Participants cannot use the CIFAR-published training labels, test images, or test labels for training or model selection;

- Participants can use the additional information we provide (i.e., worker-id and work-time, available at this Github);

- Learn from scratch. No pre-trained model can be used.

5. Submission Policy

Code Submission and Evaluation

- Participants must submit reproducible code via a downloadable link, e.g., GitHub;

- The script run.sh for running the code must be provided.

- Environments must be specified in requirements.txt.

- We will run run.sh with 5 pre-fixed seeds. Each run will be evaluated on a subset of the CIFAR-10/CIFAR-100 test data randomly sampled with replacement, and we will take the average performance over the 5 runs.

- For CIFAR-10, there are three noise types: rand1, worst, aggre. Each participant will receive three ranks, one per noise type. A missing submission counts as the last rank. Our evaluation metric is similar to Borda count: the score of the i-th ranked submission is given by max(11-i, 0). The accumulated score over the three noise regimes determines the final score.

- For CIFAR-100, there is only one dataset. The average performance over 5 seeds determines the winner.

- We will test performance with learning.py for the learning task.
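The evaluation and ranking rules above can be sketched as follows. This is a minimal illustration and not the organizers' evaluation script: the function names are hypothetical, the resampled subset is assumed to have the same size as the full test set (the rules do not state a size), and "last rank" is assumed to equal the number of participating teams.

```python
import random
from typing import Dict, Sequence


def bootstrap_accuracy(correct: Sequence[bool], seed: int) -> float:
    """Accuracy on test examples resampled with replacement.

    `correct[i]` says whether the model classified test example i correctly.
    Assumption: the resampled subset is as large as the full test set.
    """
    rng = random.Random(seed)
    n = len(correct)
    sample = [correct[rng.randrange(n)] for _ in range(n)]
    return sum(sample) / n


def mean_accuracy(per_run_correct: Sequence[Sequence[bool]], seeds: Sequence[int]) -> float:
    """Average the resampled accuracy over the runs (one seed per run)."""
    if len(per_run_correct) != len(seeds):
        raise ValueError("need exactly one seed per run")
    scores = [bootstrap_accuracy(c, s) for c, s in zip(per_run_correct, seeds)]
    return sum(scores) / len(scores)


def borda_score(rank: int) -> int:
    """Score of the i-th ranked submission: max(11 - i, 0)."""
    return max(11 - rank, 0)


def final_scores(
    ranks: Dict[str, Dict[str, int]],
    noise_types: Sequence[str] = ("aggre", "rand1", "worst"),
) -> Dict[str, int]:
    """Accumulate Borda-style scores over the CIFAR-10N noise regimes.

    `ranks[team][noise_type]` is a 1-based rank. A team with no entry for a
    noise type gets the last rank, assumed here to be the number of teams.
    """
    last_rank = len(ranks)
    return {
        team: sum(borda_score(per_noise.get(nt, last_rank)) for nt in noise_types)
        for team, per_noise in ranks.items()
    }


example = final_scores({
    "team_a": {"aggre": 1, "rand1": 2, "worst": 1},
    "team_b": {"aggre": 2, "rand1": 1},  # no "worst" submission
})
```

In the example, team_a scores 10 + 9 + 10 = 29, while team_b scores 9 + 10 + 9 = 28 because its missing "worst" entry is treated as rank 2 of 2.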

IMPORTANT:

This competition is time-constrained. We do not recommend spending too much time on CIFAR, so training will be stopped at 10xBaselineTime. The baseline code (training with cross-entropy and ResNet34) is available at ce_baseline.py. For example, if ce_baseline.py takes 1 hour to run on your device, your method should run for no longer than 10 hours. We will use the best model selected by noisy validation data within 10xBaselineTime.
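A training loop that respects this budget might look like the sketch below. It is only an illustration under stated assumptions: `train_one_epoch` and `noisy_val_accuracy` are hypothetical callbacks supplied by the participant, `baseline_seconds` is the measured runtime of ce_baseline.py on the same device, and a checkpoint that finishes after the deadline is assumed not to count.

```python
import time
from typing import Callable, Optional, Tuple, TypeVar

M = TypeVar("M")


def train_with_budget(
    train_one_epoch: Callable[[], M],
    noisy_val_accuracy: Callable[[M], float],
    baseline_seconds: float,
    max_epochs: int,
    clock: Callable[[], float] = time.monotonic,
) -> Tuple[Optional[M], float]:
    """Train until 10x the baseline time and keep the best checkpoint.

    Checkpoints are compared by accuracy on noisy validation data only;
    clean labels are never consulted.
    """
    budget = 10.0 * baseline_seconds
    start = clock()
    best_model: Optional[M] = None
    best_acc = float("-inf")
    for _ in range(max_epochs):
        if clock() - start >= budget:
            break  # budget exhausted before starting another epoch
        model = train_one_epoch()
        if clock() - start > budget:
            break  # this epoch finished after the deadline; discard it
        acc = noisy_val_accuracy(model)
        if acc > best_acc:
            best_model, best_acc = model, acc
    return best_model, best_acc
```

The `clock` parameter exists so the loop can be exercised with a fake timer; in real use the default `time.monotonic` is enough.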